The feedback loop

Neuroscientist Sliman Bensmaia investigates the somatosensory system to improve artificial limbs.

By Michael Knezovich

Photography by Dan Dry

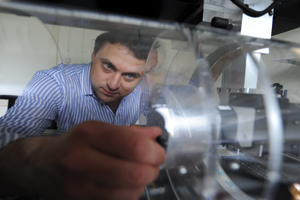

Bensmaia uses a custom-made instrument to run textures across the fingers of human and monkey subjects at different speeds and pressures.

Close your eyes and touch your index finger to the tip of your nose. Simple, right?

When you decide to shut your eyes and move your arm, the brain's motor cortex fires neurons that signal muscles to move. Your skin, muscles, and joints send messages to your brain that allow you, without consciously thinking about it, to know exactly where your arm is in space. You can gauge how fast and in what direction it's moving, when it's approaching your face, and when you should slow it down. You know when to curl your wrist and extend your finger, and, finally, when to make a gentle touchdown. The feedback mechanism generating these signals and sensations is your somatosensory system. Without it, you'd likely miss your nose entirely or, worse yet, break it.

Understanding how the somatosensory system works is "one of the basic questions of neuroscience," says Sliman Bensmaia, assistant professor of organismal biology and anatomy. It's also critical to making neuroprosthetics (brain-driven artificial limbs) a reality for amputees. Great strides have been made on the motor part of the problem. Biomedical engineers have already created prosthetics that mimic human arms, including the complex articulation of their joints. Researchers are starting to understand the relationships between activity in the motor cortex and limb movements. Right now, for example, it's possible for an amputee to move a neuroprosthesis by thought. A chip reads the brain impulses that would signal the native limb to move, and those impulses are translated into commands that activate the artificial limb.

Without the sensations provided by the somatosensory system, however, controlling these sophisticated prostheses takes painstaking effort, akin to playing one of those arcade crane games in which you use a joystick to try to snag a teddy bear. An amputee must concentrate intensely, maneuvering the arm mostly by sight, which makes for clumsy, imprecise movement. "It's a very unnatural thing," says Bensmaia. Patients are "using a visual system to guide it, but you actually use your somatosensory system to guide your arm."

Bensmaia began studying these mechanisms as a PhD student at the University of North Carolina at Chapel Hill. After earning his PhD in cognitive psychology, with a minor in neurobiology, he did postdoctoral work at Johns Hopkins University and then came to Chicago in July 2009.

His current project explores sensorimotor control by focusing on proprioceptive feedback, the sense of limb state that the brain uses to track where fingers, toes, and limbs are at any given moment. Right now, Bensmaia says, no one quite knows how individual neurons communicate that information to the brain.

To build that understanding, he works with rhesus macaque monkeys, whose somatosensory and motor systems are very similar to those of humans. "We're having the monkeys do a lot of things using their hands." That includes "tracking their hands using the same kind of motion tracking system they used to make The Lord of the Rings," says Bensmaia. The monkeys wear reflective markers on their hands, elbows, and shoulders. Ten infrared cameras track their motion in three dimensions while microchips implanted in the monkeys' brains record activity in the somatosensory and motor cortices. "By recording the activity that is evoked in both cortices," he explains, "we'll be able to see how these two areas interact with one another."

In April, he and his coresearchers, Chicago neuroscientist Nicholas Hatsopoulos and Northwestern neuroscientist Lee Miller, launched a study to correlate, moment to moment, what's going on in the monkeys' brains with what their limbs are doing. Using probability theory, they zero in on which neuron-firing patterns are associated with which actions. The investigation will pinpoint not only which neurons are firing but also the type and tempo of the signals they're sending. From there, the researchers can reverse engineer the system and create predictive algorithms that may eventually be paired with neuroprosthetics to simulate limb-state awareness.

"Our approach is very simple-minded in a way," Bensmaia says. "What we do is say we're going to understand at a mathematical level what happens in the native limb, and we're going to replicate it."

He used a comparable process to discover that the mechanism that provides our tactile sense of motion (how we feel a coffee cup begin to slip from our hand, for example) is very similar to the visual system's mechanism for detecting motion. In the visual cortex, it's well established that some neurons fire only in response to motion, not to other stimuli such as color or shape. In a paper published in the February issue of PLoS Biology, Bensmaia reported that some somatosensory neurons respond in a similar way: exclusively to tactile motion.

For that study, Bensmaia implanted a microchip in the brain of a rhesus macaque and recorded its activity as several bar and dot patterns were moved across the monkey's fingertips. Using that data, he developed and refined algorithms that accurately predict the neuron-firing patterns associated with tactile motion. One day, he says, artificial mechanoreceptors, sensors modeled on the receptors in the skin that signal tactile motion, could be placed on the fingertips of a neuroprosthesis. The algorithms could be programmed into a microchip that would send the brain the same kind of signals that native fingertips provide.

The ultimate goal? Bensmaia turns to his computer and clicks to a clip from the movie The Empire Strikes Back. It shows Luke Skywalker trying out his high-tech prosthetic hand. In that scene, his new fingers are pricked with a pin, and Skywalker feels everything. "That's what we're shooting for."
