More Than Meets the Eye

Although the eye is vision’s front door, the brain creates perception. Researchers want to reveal how the mind translates light into visual experience.

White boards, splattered with red, green, and blue equations, cover the walls. Computers crowd paper-stacked desks. Programming manuals line bookshelves. In offices that resemble the habitats of their mathematics or computer-science colleagues, neurobiologists, ophthalmologists, and psychologists investigating visual perception wield mathematical and computational models not to describe the physical world, but rather to decipher how it’s seen. In laboratories spread across campus, they have projects under way to unlock the inner workings of the relationship between the eyes, brain, and perception—the process of interpreting sensory information. Exploring both high- and low-level functions, from memory to motion, their studies may even lead to a cure for blindness.

“The visual system is not at all like a camera,” says Steven Shevell, a professor in psychology and ophthalmology & visual science, dispelling a popular analogy. Going far beyond what a camera does, the visual system “represents objects in meaning.”

The system activates when light waves reflect off objects and enter the eye. Passing through the cornea, light reaches the retina, where rods and cones convert it into electrical impulses. Those impulses travel along the optic nerve, a mass of ganglion cells, to the back of the brain and the primary visual cortex—perception’s powerhouse. From there, messages circulate to other regions and, presto, a three-dimensional, colorful mental image surfaces.

Scientists trying to break down the events that lead to visual experience have a lot of ground to cover. “Just seeing an object, for example, causes many thousands of cortical neurons to become active,” Chicago neurophysiologist David Bradley notes, “and their activities together create and shape what we perceive.
But how are these activities understood, or ‘read out’ by the brain? If we could actually measure all these activities, would we know what perception was?”

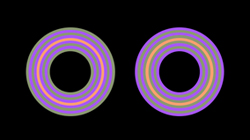

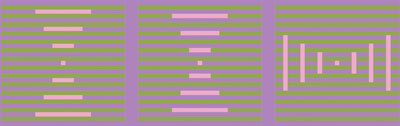

“Seeing is believing, right?” jokes Shevell, 54. Not exactly: “We do not have an accurate representation of physical reality,” he explains. The properties that make up what people “see”—color, orientation, texture, depth, motion, size, location—are at the mercy of both the eyes and the brain. “The brain,” for its part, “makes an educated guess,” he says. “The driving force behind our research is trying to understand how our natural world is represented perceptually.”

For starters, color—his area of expertise—is a mental, not physical, phenomenon. To illustrate his point, Shevell, dressed in basic black, offers a quick neighborhood tour on a July-hot April day. “Sometimes it just strikes me,” he says, turning toward an evergreen on 57th Street outside Snell-Hitchcock Halls. “You think that direct sunlight is shining” on the shrub’s tips, but it’s “the lighter color of new buds. The actual color we perceive—either a different color at the tips or the whole plant as one color—is a mental process in which the visual system interprets the light.”

Shevell, no one-trick pony, continues the tour. Fishing a white plastic bag out of a nearby trash can, he holds it behind a bunch of pale yellow daffodils, and suddenly the flowers seem more yellow. The eye and brain sharpen the perceived difference, he explains, making the color stand out. Such sharpening, he says, “allows you to see differences among things that are close by.” For instance, the contrast between dark ruby cherries and their yellowish counterparts helps pickers discern good fruit from bad. Landscapers also play off the phenomenon—say by putting pale pink flowers next to white ones—so certain colors pop. “Monet understood,” Shevell notes, and “he painted his garden at Giverny in contrasting hues.”

The brain’s control over what people see has commercial applications too.
Grocery-store owners often install track lighting above apples to create the illusion of a redder fruit, Shevell says, and restaurateurs know that stationing a tungsten lamp over carved roast beef produces a “nice, red, and juicy” look. To unriddle those gimmicks, he harks back to high-school physics, dismantling ROY G BIV, the mnemonic device to learn the color spectrum (red-orange-yellow-green-blue-indigo-violet). Humans only “perceive light that way,” he says. In fact, “light has a wavelength, not a hue,” so color represents the brain’s responses to light of varying wavelengths.

Further, as in the daffodil example, an object can look different depending on its context, a phenomenon known as chromatic induction. “The neural representation of a particular area [of a scene] is influenced not only by the light in that area,” Shevell says, “but also by the light throughout the scene.”

Back in his Biopsychological Research Building office, he whips out another illustration: a white piece of paper with four rings printed on a background of purple and lime circles. The rings appear multiple shades of orange, but they’re actually identical, the same ink. To account for the false impression, Shevell and Florida Atlantic University’s Patrick Monnier theorized that a particular type of neuron in the primary visual cortex—responding exclusively to impulses from the retina’s short-wavelength cones—causes shifts in color appearance with patterned backgrounds composed of two chromaticities. “We’ve revealed a new kind of neuron in the brain,” he says, “and now that we understand the way it works we know it can lead to remarkably big errors,” as with the rings. After they published their theory in the August 2003 Nature Neuroscience, neuroscientists reported finding a similar cell in macaque monkeys.

“Vision is so automatic to us,” Shevell says, offering up a modern analogy: “When you push the buttons on your cell phone, you don’t think about the technology that makes it work.”

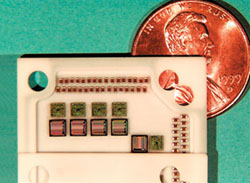

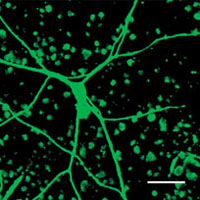

Husband-and-wife team Joel Pokorny, 63, and Vivianne Smith, 65, have been thinking about the technology that makes color vision work since meeting as graduate students at Columbia University. The duo, sitting at face-to-face desks in an office just around the corner from Shevell’s, come at their subject from the front end. “We study the retina. We’re kind of old-fashioned,” says Smith, professor in ophthalmology & visual science. “It’s OK, we’ve always been old-fashioned,” retorts Pokorny, a professor in ophthalmology & visual science and psychology.

Concentrating on the eye may be old-fashioned, but their findings are not. Their latest research centers on a newly discovered ganglion cell that helps the eye adjust to changing light levels. Pokorny and Smith are part of a multi-institutional team that first reported on the cell at the Association for Research in Vision and Ophthalmology’s 2003 conference.

“When you walk into a movie late, you first see nothing, then you adapt and see how to find a seat,” Smith explains. “This ability ensures good vision at all light levels.” Some retinal diseases can affect that adaptation, and though such diseases may be linked to the cell, she says, nothing specific has emerged yet.

Unlike its ganglion counterparts, the MOP cell, so called because it contains a photopigment believed to be melanopsin, can signal light’s presence without receiving impulses from the rods and cones. Textbooks don’t yet cover this “rare bird,” Pokorny says. Give them a year or two, Smith guesses. For now, the pair is preparing an article on the cell, which has been identified in zebrafish, rats, and mice. Although Smith and Pokorny haven’t decided on an approach, they plan to probe how it functions.

Other retinal-based projects, meanwhile, including investigating the 20 or more visual pathways that begin in the retina, keep them busy. “These pathways,” a research abstract explains, “send their messages in parallel through the optic nerve to the brain.
In the brain there are a similar number of distinctive brain structures that receive this retinal alphabet. The nature of what the eye tells the brain is thus much more complex than expected.” They want to identify those pathways, from starting point to finish—bringing them to the brain.

“The brain,” Smith concedes, “that is where there’s a big push.” Numbers justify the push: about one-third of the human brain is devoted to some aspect of seeing, Shevell notes. But much about processing sight remains unknown. “To me, brain research resembles physics in the early 20th century,” says Bradley, an assistant professor in psychology. “People had to get used to concepts,” such as special relativity and quantum theory, “before they knew what questions to ask. To really understand the brain, you have to take your imagination in new directions.”

Imagine, for instance, a device that restores sight to the blind. Bradley has—and with a multi-institutional team of scientists, including Robert Erickson, associate professor in neurosurgery, and Vernon Towle, professor in neurology and surgery, he’s working on making the concept a reality. Because the majority of blind patients have damaged eyes or optic nerves, some researchers try to induce sight by focusing on the eye. But Bradley and his colleagues have gone straight to the brain. “Most blind patients could potentially see if you stimulate the brain directly,” he argues. “The brain’s language is electrical pulses.”

To arrive at a human-ready prosthesis—Bradley hopes within the next five to ten years—the scientists must clear both technological and biological hurdles.
As the Illinois Institute of Technology, a collaborator on the project, explains on its Web site, success “depends upon providing the cortex with a well-controlled...stimulation pattern that mimics the pattern of neural activity normally associated with vision.” Providing that pattern represents a major challenge because “it is likely that a large number, at least hundreds, of parallel channels of stimulation are required.”

The team has made electronic and surgical strides, developing an implantable device including a stimulator, powered by a wireless link through a computer system, and 100 to 300 microscopic wires, or electrodes. The quarter-sized circuit board is filled with computer chips and wire connections. “My job is to figure out how to communicate with the brain with these things,” says Bradley, 43. The group is currently testing the technology with rhesus monkeys, selected for their trainability and humanlike visual system. “We’re approaching it scientifically,” he says in his deliberate style, “taking it step-by-step.”

While the visual prosthesis is a work in progress, another Bradley venture has reached fruition after four years. “That project was so hard we’re still panting,” he laughs, pausing to refuel from a Diet Pepsi can.

In September 2003 his team, known as BradLab, recorded a behavioral change in neurons when perception shifts. Displaying computer-screen images of moving patterns, morphing from two objects to one and back again, the researchers trained three rhesus monkeys, using a liquid-reward system, to indicate what they saw. Implanted electrodes measured their brain activity. “Neurons pulsate kind of like the Morse code,” Bradley explains. When the monkeys perceived a single moving object, activity condensed in one place. With a pair of objects, two clusters of activity thrummed.
“Knowing what the monkeys perceived, and knowing how the neurons responded,” he notes in a research description, “we can begin to piece together what is referred to as the neural code”—perception’s road map.

The project, like most of Bradley’s work, demands dabbling in diverse disciplines, a fact reflected in his Green Hall office. A developer’s guide to database applications sits on his desk alongside multiple computers. Formulas decorate a wall. Math, he notes, is key. “We create models that try to imitate the behavior of the brain,” he says. “When you imitate something you learn a lot about it.” For example, BradLab postdoc Gopathy Purushothaman used a model to prove his hypothesis suggesting which mechanisms cause neurons to gain sensitivity when a subject catches on to a task—in this case, a monkey judging the direction of a moving pattern.

“What’s missing in vision research,” Bradley contends, “is a mathematics that has a similar function” as calculus has to astronomy. “Many of us hope that one day a simple principle will be discovered that explains how such extraordinary” computation occurs—how the brain encodes a complex world. In the meantime, without a numerical shortcut to understanding the visual system, researchers must navigate its myriad paths.

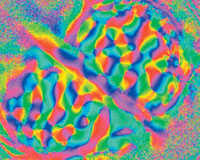

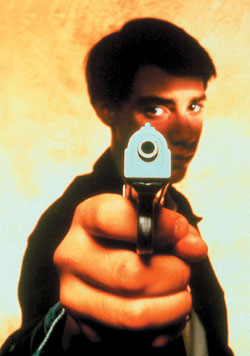

Naoum Issa, assistant professor in neurobiology, pharmacology, and physiology, follows those paths to where the primary visual cortex processes detailed information. For 36-year-old Issa, working in the trenches is more than a state of mind. “It’s tough to find my office if you haven’t already been there,” he warns in an e-mail. Indeed, down in the Surgery Brain Research Institute’s labyrinthine basement, his headquarters elude most visitors. He makes a habit of meeting them at the ground-floor entrance.

Issa takes a similar approach to explaining his research, delving deeper only after walking through the first level step-by-step. “To me the coolest thing is understanding how information is stored in the visual system, how it’s moving from place to place, and what kind of math is being done at each step,” he says, ultimately narrowing his focus to “how the brain limits our ability to see well.”

To lay bare the mechanisms underlying that limitation, Issa investigates two situations where visual acuity, “a measure of how small a detail you can see,” suffers. The first, amblyopia, a dimming of sight in one eye, affects nearly 3 percent of the U.S. population. Because the disorder “persists even after correcting physical problems with the eye, it is a pathology of the central nervous system,” his lab’s Web site explains. “Understanding how spatial information is disrupted in the amblyopic brain is therefore central to understanding this problem.” To study what goes wrong in the primary visual cortex, he shows amblyopic cats a moving bar on a computer screen, through both their good and bad eyes, and compares the brain’s response. “We predict that specific domains of the visual cortex are affected more profoundly by amblyopia than are other areas.”

The second case involves dynamic acuity, an aspect of motion perception that shifts as objects speed up or slow down. “It turns out the eye can follow a fast change in light,” he says. “The brain can’t.
It throws away that information. Presumably it’s doing that for a reason.” For example, “if you look at a stationary train, you can see all the detail,” he notes. “But when the train’s moving quickly, it blurs.”

As a postdoc at the University of California, San Francisco, Issa mapped where a cat’s brain represents such “big” (the train) and “very fine” (the detailed exterior) information, called low and high spatial frequencies, reporting his findings in the November 2000 Journal of Neuroscience. Expanding that work, he has returned to cats watching a moving bar, this time at different speeds, recording their neural activity. The data indicate that as speed goes up, activity increases in the low spatial frequency domain and decreases in the high. Bingo. He has his subregions—in the primary visual cortex’s frontmost section. “Nobody knew where it happened. We’re showing it now,” he exclaims in an April phone call. Pop music plays in the background. Next up: determining “what properties of the brain cause that to happen.”

With results in hand, Issa takes a step back. “The real goal is to understand how images are represented in the brain,” he says. “The field has done a good job of representing how the brain represents a static image. The next challenge is how the brain deals with changing images.”

When the conversation turns to changing images, memory inevitably comes up. Enter Vera Maljkovic, assistant professor in psychology, for whom something remembered is a professional calling. “I was always on the verge between the visual stuff and higher-level things like memory,” Maljkovic, 41, says from Green Hall’s Visual Perception Laboratory. “Memory is extraordinarily complex because it’s any change in the mind that is the result of some experience.
It’s just this enormous amount of information we store about everything.” Narrowing her scope, she investigates visual memory—“matching aspects of what you see to the information in your head”—in the short term, a matter of mere seconds. Her studies explore the steps people take to understand their surroundings and to make decisions. “The entire process depends on memory,” she explains. “You cannot really comprehend anything unless you have a memory to begin with. You have to have a knowledge base already in your mind.”

People rely on such knowledge when faced with competing visual stimuli as they execute saccades—the quick eye movements “we use most of the time to look around,” as the lab’s Web site puts it. To receive data, humans turn either their attention—the brain’s focus—or their gaze toward what interests them. “The two behaviors are tightly related,” the Web site explains. But the mind is a step ahead. “It is in fact attention that shifts to the target first, thereby directing the eye movement to a particular point in space.”

Attention also has a stake in what gets remembered, according to Maljkovic’s latest research. “The things that go into memory are those things we attend to, that attract our attention,” she says, breaking down the connection: “Every time you see something, if you pay attention, it leaves a memory trace.” Testing the mechanism, she timed how long it took human subjects to identify the irregular shape in a trio. As patterns repeated, the responses got faster and faster. The memory trace helps “us know where to look next,” she says. “You can pay attention to relevant things and you’re faster.”

In another experiment on “things happening quickly over time,” Maljkovic moves into muddier territory: comprehending a scene. “Picture memory is far more complex because there are many objects, and you have to recognize their relationships and understand some emotional significance within those relationships,” she notes.
Yet “people can comprehend scenes in a remarkably short amount of time.” To measure that ability, she showed subjects a series of 384 color photographs at different durations, from 13 milliseconds to two seconds per image, and recorded how fast they grasped a positive, negative, or neutral meaning. The photos included a man swigging liquor from the bottle, an astronaut floating in outer space, a teenager with a pointed gun, and a woman nursing an infant. Subjects correctly categorized more than 20 percent of the images at the shortest intervals, and with additional time the percentage increased. At 200 milliseconds they had near-perfect scores. Such proficiency demonstrates, Maljkovic concludes, that “we understand the emotional content of an image within a single glance.”

Teaming with Dartmouth computer scientist Hany Farid, she took her finding a step further. Farid constructed a computational model that categorized several of the positive and negative photographs by contrast, orientation, and spatial frequency. Using that data, he then asked the computer to predict an unfamiliar photo’s meaning. The computer generally guessed wrong, suggesting that the subjects needed more than purely visual clues to understand a scene. It “seems that people comprehend the image based on memory,” she says, “and then they decide its meaning.” It’s another complex function of visual perception yet to be understood.

Maljkovic’s attempts at quantifying memory dot a white board in her office—models and formulas that suggest seeing is a numbers as well as a mind game. “We don’t think vision is hard,” she admits, “because we do it. So what’s the big deal? It is easy, but the machinery is so complex.” Along with Chicago’s other visual perception researchers, she’s trying to describe the machinery in a way that makes seeing as simple as it looks.

phone: 773/702-2163 | fax: 773/702-8836 | uchicago-magazine@uchicago.edu